Big data is the term used to describe large, complex, and often unstructured data collections that conventional data processing technologies cannot handle. Such technologies fall short when it comes to acquiring, analyzing, curating, sharing, visualizing, securing, and storing data at this scale. As a result, Hadoop experts are in high demand. So, join the Hadoop course in Bangalore!

Any attempt to use conventional software for big data integration results in errors and clunky operations because so much of the data is unstructured. In contrast to relational databases, which are traditionally used for data management, big data platforms aim to handle such data more effectively while reducing the margin of error.

Over 150 zettabytes (150 trillion gigabytes) of data will need to be handled and analyzed by 2025, according to a 2019 big data article published in Forbes. In addition, 40% of the businesses surveyed said they frequently need to manage unstructured data. This demand creates significant data handling problems, which is why frameworks like Hadoop are necessary. These technologies simplify data processing and analysis. So, enrolling in a Hadoop course in Bangalore becomes essential.

What is Hadoop?

Apache Hadoop is an open-source framework for processing large datasets in parallel across the machines of a cluster with relatively little code. In Hadoop training in Bangalore, you will learn about its four modules:

Hadoop Common

A group of libraries and tools that support other Hadoop modules.

HDFS, the Hadoop Distributed File System

A cluster-based, distributed, fault-tolerant, auto-replicating file system that makes it easy to access stored data.
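To get a rough feel for what auto-replication means for storage, the sketch below estimates how many blocks a file is split into and how much raw disk space its copies occupy. It assumes the commonly used defaults of a 128 MB block size and a replication factor of 3; both are configurable per cluster, and the function name `hdfs_footprint` is hypothetical, not part of any Hadoop API.

```python
import math

BLOCK_SIZE_MB = 128      # assumed default block size (configurable per cluster)
REPLICATION_FACTOR = 3   # assumed default replication factor (configurable)


def hdfs_footprint(file_size_mb, block_size_mb=BLOCK_SIZE_MB,
                   replication=REPLICATION_FACTOR):
    """Estimate blocks, block replicas, and raw storage for one file.

    Illustrative arithmetic only; it does not talk to a real cluster.
    """
    blocks = math.ceil(file_size_mb / block_size_mb)
    # The final block only occupies the data it actually holds, so raw usage
    # is file size times replication, not block count times block size.
    raw_storage_mb = file_size_mb * replication
    return blocks, blocks * replication, raw_storage_mb


blocks, replicas, raw_mb = hdfs_footprint(1000)
print(blocks, replicas, raw_mb)
```

Under these assumptions, losing a single machine still leaves two copies of every block it held, which is what makes the file system fault-tolerant.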

YARN in Hadoop

A processing layer that manages resources, schedules jobs, and attends to diverse processing requirements.

Hadoop MapReduce

IBM refers to MapReduce as “the heart of Hadoop.” A cluster of nodes, or machines, can process big datasets using this batch-oriented and fairly rigid programming model. Data processing happens in two stages: Map and Reduce.

In the Map phase, a mapper function processes the separate splits of the data that are distributed across the cluster and emits intermediate key-value pairs. In the Reduce phase, a reducer function aggregates those intermediate results by key to produce the final output.
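The two stages can be imitated on a single machine with a few lines of plain Python. This is only a sketch of the programming model, not Hadoop's actual API: the function names `map_phase`, `shuffle`, and `reduce_phase` and the sample text are made up for illustration, and a real cluster would run the mappers on different nodes.

```python
from collections import defaultdict


def map_phase(chunk):
    """Mapper: turn one chunk of text into (word, 1) pairs."""
    return [(word.lower(), 1) for word in chunk.split()]


def shuffle(mapped_pairs):
    """Group values by key, standing in for the framework's shuffle step."""
    grouped = defaultdict(list)
    for key, value in mapped_pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reducer: aggregate all values collected for one key."""
    return key, sum(values)


# Each string stands in for a data split stored on a different node.
chunks = ["big data needs Hadoop", "Hadoop processes big data"]

mapped = [pair for chunk in chunks for pair in map_phase(chunk)]
word_counts = dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())
print(word_counts)
```

The mapper never needs to see the whole dataset and the reducer only sees the values for one key at a time, which is why the work can be spread across many machines.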

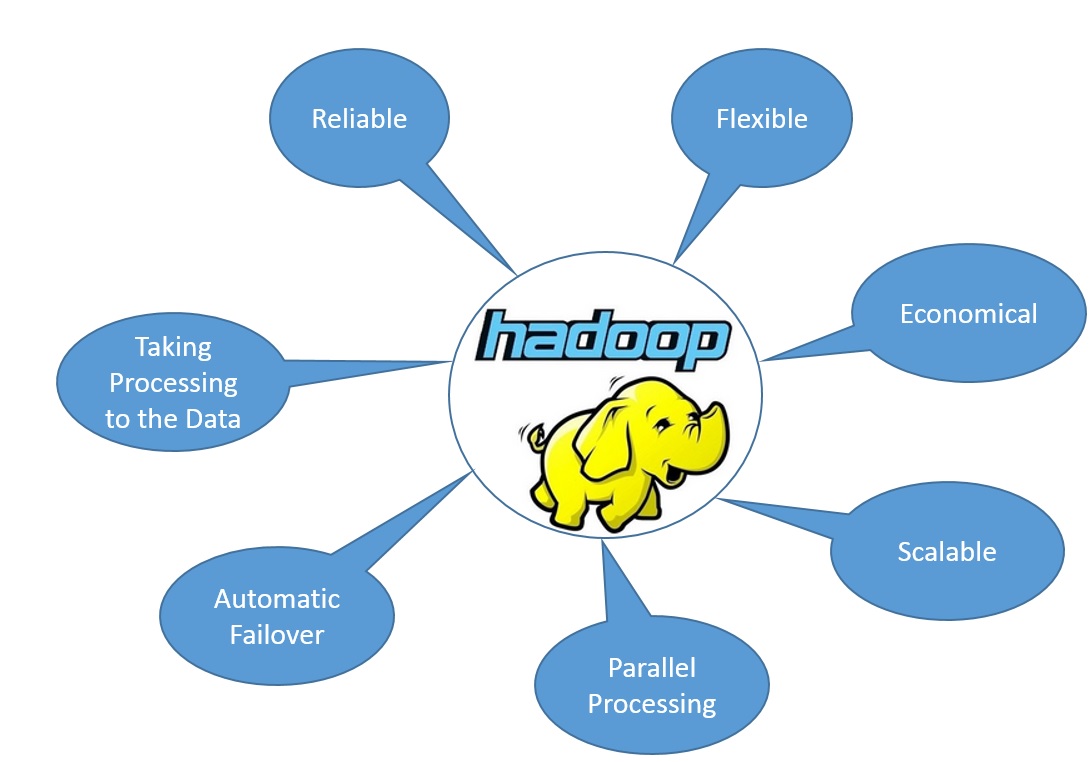

Benefits of Hadoop

It is hard to overstate the importance of fast data processing in business. Although other frameworks also serve this goal, enterprises use Hadoop for the following reasons:

Scalability

Businesses can process petabytes of data stored in HDFS and analyze it to extract value.

Flexibility

Hadoop can readily work with multiple data sources and data types.

Speed

Parallel processing and minimal data movement make it possible to process vast amounts of data quickly.

Adaptability

Several programming languages, including Python, Java, and C++, are supported.
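For Python, this support typically comes through Hadoop Streaming, a utility that lets any program act as a mapper or reducer as long as it reads records from standard input and writes tab-separated key and value lines to standard output. The sketch below imitates that contract locally. The `store,amount` record layout, the sample values, and the function names are assumptions made for illustration, and a real streaming script would iterate over `sys.stdin` instead of a list.

```python
from itertools import groupby


def mapper(lines):
    """Emit one 'store<TAB>amount' line per 'store,amount' input record."""
    for line in lines:
        store, amount = line.strip().split(",")
        yield f"{store}\t{amount}"


def reducer(sorted_lines):
    """Sum amounts per store; input must already be sorted by key."""
    pairs = (line.rstrip("\n").split("\t", 1) for line in sorted_lines)
    for store, group in groupby(pairs, key=lambda pair: pair[0]):
        total = sum(int(amount) for _, amount in group)
        yield f"{store}\t{total}"


# Hypothetical sample records; sorted() stands in for the framework's
# sort between the map and reduce steps.
sample = ["north,120", "south,80", "north,30"]
totals = list(reducer(sorted(mapper(sample))))
for line in totals:
    print(line)
```

Because the contract is just text lines in and text lines out, the same two functions could be rewritten in any language that can read and write standard streams.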

So, what are you waiting for? Enroll in Hadoop training in Bangalore now with Inventateq and accomplish all your goals in no time.

by Sowmya